YouTube videos for Pyspark Write To Bigquery

GCP Create Dataproc cluster with Jupyter Notebook installed | Run PySpark Jobs from Notebook

#GCP_Data_Engineer Demo by Swagat | #bigquery #dataflow #dataprocessing #bigml #bigtable #pyspark

DE|GCP|Session-29|Deploying Pyspark Application, Integration in PySpark & Storing In BigQuery.

15 How Spark Writes data | Write modes in Spark | Write data with Partition | Default Parallelism

Apache Spark Real Time Scenario | Read BigQuery GCP from Databricks | Using PySpark

Loading Data into BigQuery from a Storage Bucket using Python APIs: Step-by-Step Guide | GCP | APIs

How to Read and Write data from Bigquery Using Dataproc cluster using gcloud CLI

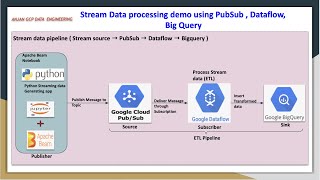

GCP Apache Beam stream data processing pipeline | Pub Sub , Dataflow , Big Query

PySpark Tutorial

Google Dataproc and BigQuery Project

GCP Dataproc create cluster using CLI | Run PySpark job through GCP console

The difference between Pandas and PySpark. #dataengineering #bigdata #spark #interview #preparation

PySpark - How to Load BigQuery table into Spark Dataframe

Pyspark app in 50 seconds #spark #pyspark #data #ingestion #transform #load #gcp #aws #azure